The 2015 Sea Ice Outlook (SIO) Post-Season Report was developed by the SIO Action Team, led by Julienne Stroeve and Walter Meier with input from the Sea Ice Prediction Network (SIPN) Leadership Team and with feedback following circulation of the draft report to the SIPN mailing list.

Introduction

We appreciate the contributions by all participants and reviewers in the 2015 Sea Ice Prediction Network (SIPN) Sea Ice Outlook (SIO). The SIO, a contribution to the Study of Environmental Arctic Change (SEARCH), provides a forum for researchers and others to contribute their understanding of the state and evolution of Arctic sea ice, with a focus on forecasts of the Arctic summer sea ice minima, to the wider Arctic community. The SIO is not a formal forecast, nor is it intended as a replacement for existing forecasting efforts or centers with operational responsibility. Additional background material about the Outlook effort can be found on the background page. With funding support provided by a number of different agencies, the SIPN project provides additional resources and a forum for discussion and synthesis. This year we received a total of 105 submissions from June to August, with 31 individual submissions focused on pan-Arctic conditions for June, 34 for July, and 37 for August. We also had one regional submission in June, one in July, and one in August.

Review of Arctic 2015 Summer Conditions

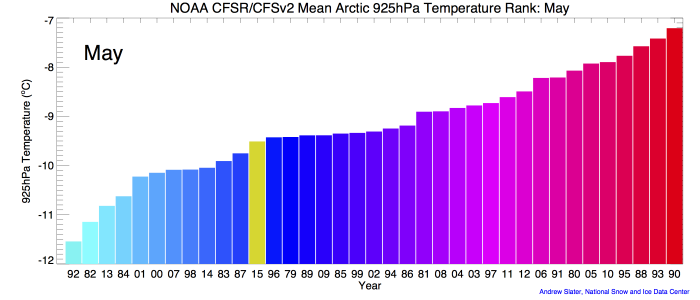

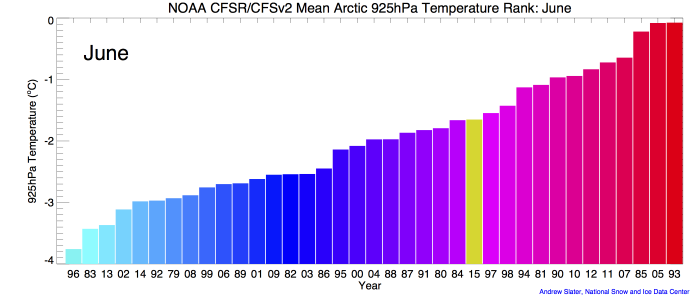

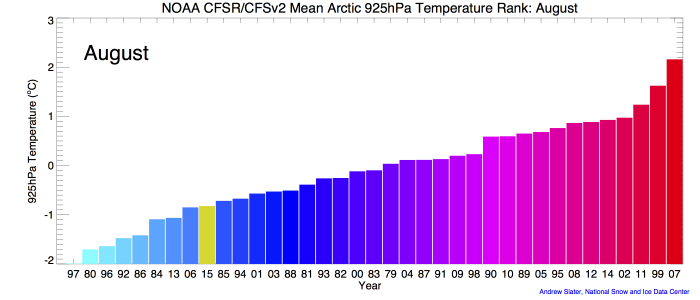

The summer sea ice retreat began earlier than normal with the maximum (winter) extent occurring about two weeks early on 25 February (See: NSIDC Arctic Sea Ice News & Analysis, 19 March 2015). It was also the lowest maximum extent in the satellite record. However, the melt season overall got off to a relatively slow start. May and June temperatures were near or below average (Figure 1) and extent declined at average or even below average rates through the end of June. In particular, melt started later than normal in Baffin Bay and ice lingered longer than usual into the summer. However, in some regions warm conditions were experienced. For example, Barrow, Alaska experienced its warmest May on record and snow disappeared by May 28, the second earliest date since records began 73 years ago. This led to surface melt beginning a month earlier than normal in the southern Beaufort Sea. The Kara Sea also experienced early melt onset. Sea ice in both the Beaufort and Kara regions opened up relatively early (See: NSIDC Arctic Sea Ice News & Analysis, 5 August 2015).

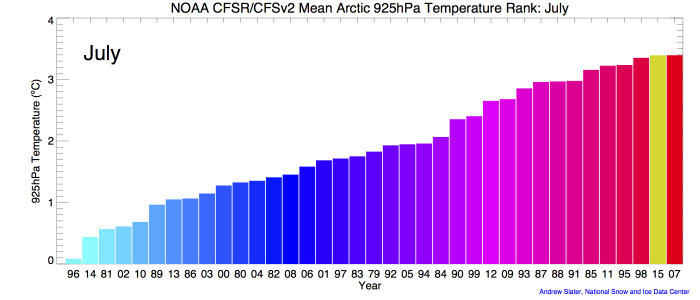

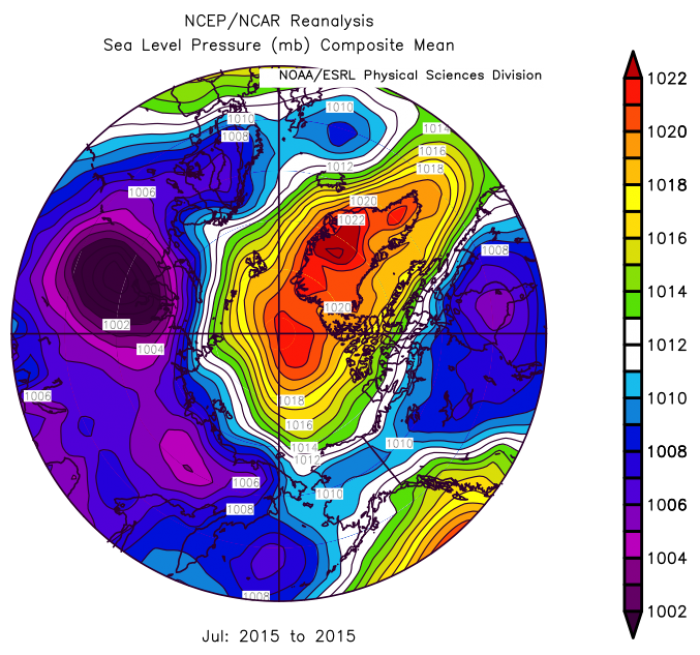

Following the relatively cool May and June, the Arctic experienced one of the warmest Julys on record, which led to rapid ice loss through the month. The warm July was likely related to higher than normal sea level pressure over the central Arctic during the month (Figure 2), which indicates relatively clearer skies (and more incoming solar radiation). The high pressure over the Arctic was accompanied by lower pressure over northern Eurasia. This pattern helps funnel warm air into the Arctic from the south and compact sea ice into a smaller area. A very similar pattern characterized much of the 2007 summer. The rapid ice loss continued well into August and for a brief time it appeared that 2015 might surpass 2012's record low extent. However, extent loss slowed considerably in late August and into September.

The year began with considerable multi-year ice in the Beaufort and Chukchi seas, as indicated by sea ice age data (See: NSIDC Arctic Sea Ice News & Analysis, 6 October 2015). However, much of the older ice in this region melted over the summer, similar to many summers over the past decade.

Pan-Arctic Overview

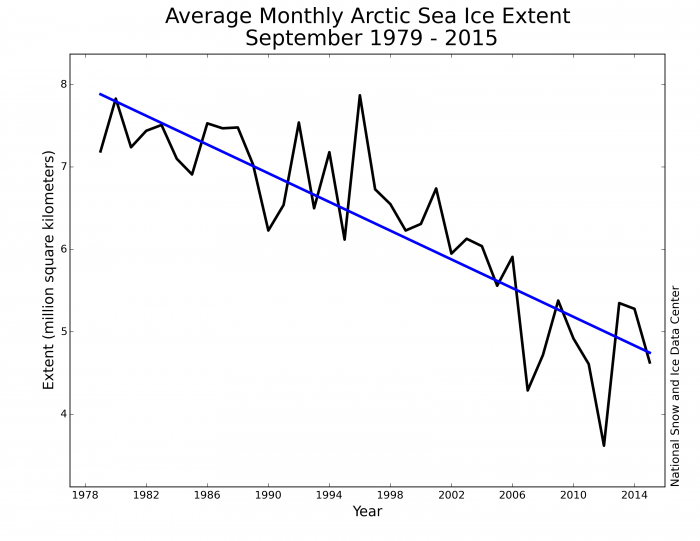

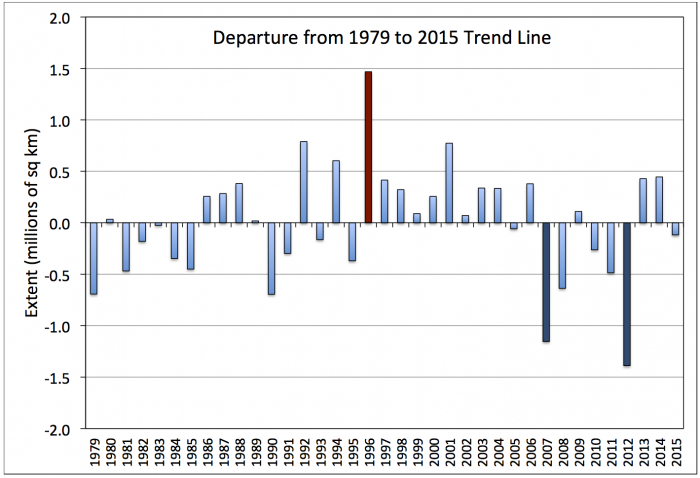

The September 2015 Arctic sea ice extent was 4.63 million square kilometers, based on the processing of passive microwave satellite data using the NASA Team algorithm, as used by the National Snow and Ice Data Center (NSIDC). This is the fourth lowest September ice extent observed in the modern satellite record, which started in 1979 (Figure 3). It is 1.87 million square kilometers lower than the 30-year average for 1981-2010, 1.01 million square kilometers greater than the current record low September ice extent observed in 2012, and statistically indistinguishable from 2011, which was the third lowest year on record (see Figure 3).

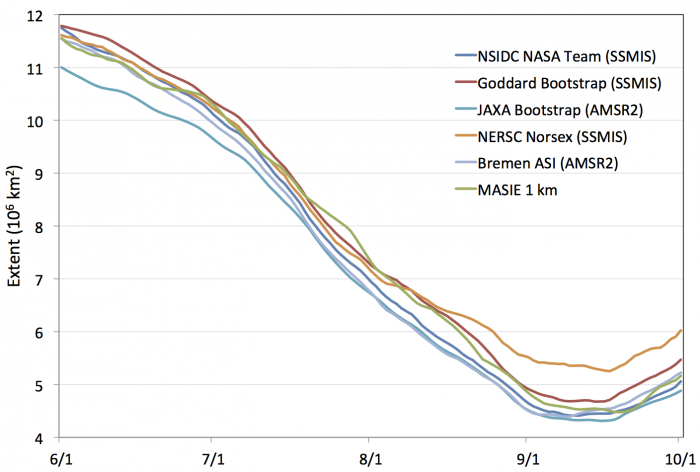

While the SIO uses the monthly NSIDC sea ice extent estimate (based on the NASA Team algorithm) as its primary reference, there are several additional algorithms that have been developed over the years to derive sea ice concentration and extent from passive microwave sensors. These various algorithms employ different methods and combinations of microwave frequencies and polarizations (i.e., the orientation in which the waves oscillate). While no single algorithm has been found to be clearly superior in all situations, the algorithms show differences in their sensitivity to various ice characteristics (ice thickness, snow cover, surface melt) and the surrounding environment (the atmosphere), resulting in differences in the estimated ice concentration and extent. In addition, the algorithms may use different source data and employ different processing and quality-control methods. One significant factor appears to be the use of different land masks, which can potentially account for much of the bias between products. The land mask used may be influenced by the source data's spatial resolution. For example, products based on data from the JAXA AMSR2 sensor have higher spatial resolution than those using the DMSP SSMIS sensor, and thus have greater potential precision in locating the coastline. The higher resolution also affects the precision of the ice edge, which also contributes to biases between products. Note that resolution and land mask issues may further affect how well the SIO forecasts match the observed extent, as different methods of forecasting the sea ice extent also employ different spatial resolutions and land masks.

The range of the algorithms provides an indication of uncertainty in the observed extent. To augment the NSIDC value, we provide estimates below from selected other algorithms that are readily available online (thanks to G. Heygster, Univ. Bremen, for providing Bremen ASI values; and J. Comiso and R. Gersten, NASA Goddard, for providing Bootstrap values).

The trajectories of daily ice extent estimated using various ice concentration datasets are shown in Figure 4. This highlights the variation in ice extent and the estimated date of the summer minimum found across these different observational products. All but NERSC's Arctic-ROOS algorithm are generally within ~0.4 million square kilometers of one another, and the range of monthly and daily extent after removing the highest and lowest outliers is 0.31 and 0.29 million square kilometers, respectively (Table 1).

| Product | Sep Avg (million km²) | Min Daily (million km²) | Source Website |
|---|---|---|---|
| NSIDC SII* | 4.63 | 4.41 | http://nsidc.org/data/seaice_index/ |
| NSIDC NT | 4.57 | 4.41 | http://nsidc.org/data/seaice_index/ |
| GSFC BT | 4.88 | 4.68 | http://neptune.gsfc.nasa.gov/csb/ |
| JAXA BT | 4.47 | 4.31 | https://ads.nipr.ac.jp/vishop/vishop-extent.html?N |
| NERSC Norsex Arctic-ROOS | 5.47 | 5.25 | http://arctic-roos.org/observations/ |
| Bremen | 4.63 | 4.48 | http://www.iup.uni-bremen.de:8084/amsr2/ |
| MASIE | 4.66 | 4.48 | http://nsidc.org/data/masie/ |
| Avg (all) | 4.75 | 4.57 | |
| Avg (-outliers) | 4.67 | 4.49 | |
| Range (-outliers) | 0.31 | 0.29 | |
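The summary rows of the table can be approximated with a few lines of Python. This is only a sketch: it assumes that "outliers" means the single highest and single lowest product, and it works from the two-decimal values printed in the table, so its results can differ from the published summary rows by a few hundredths. The names `sep_avg` and `summarize` are illustrative and not part of the original report.

```python
from statistics import mean

# September-average extents (million km^2), copied from the rounded table values.
sep_avg = {
    "NSIDC SII": 4.63,
    "NSIDC NT": 4.57,
    "GSFC BT": 4.88,
    "JAXA BT": 4.47,
    "NERSC Norsex Arctic-ROOS": 5.47,
    "Bremen": 4.63,
    "MASIE": 4.66,
}


def summarize(values):
    """Return (mean of all, mean without min/max, range without min/max).

    Assumption: "outliers" are the single lowest and single highest values.
    """
    ordered = sorted(values)
    trimmed = ordered[1:-1]  # drop the lowest and the highest product
    return mean(ordered), mean(trimmed), trimmed[-1] - trimmed[0]


avg_all, avg_trimmed, range_trimmed = summarize(sep_avg.values())
print(f"avg(all)={avg_all:.2f} avg(-outliers)={avg_trimmed:.2f} "
      f"range(-outliers)={range_trimmed:.2f}")
```

The same function can be applied to the minimum daily column to obtain the corresponding summary values.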

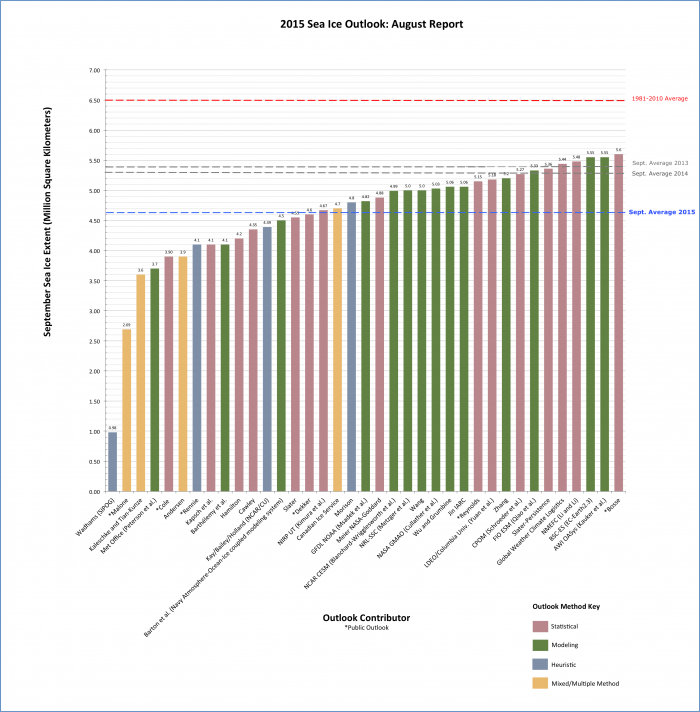

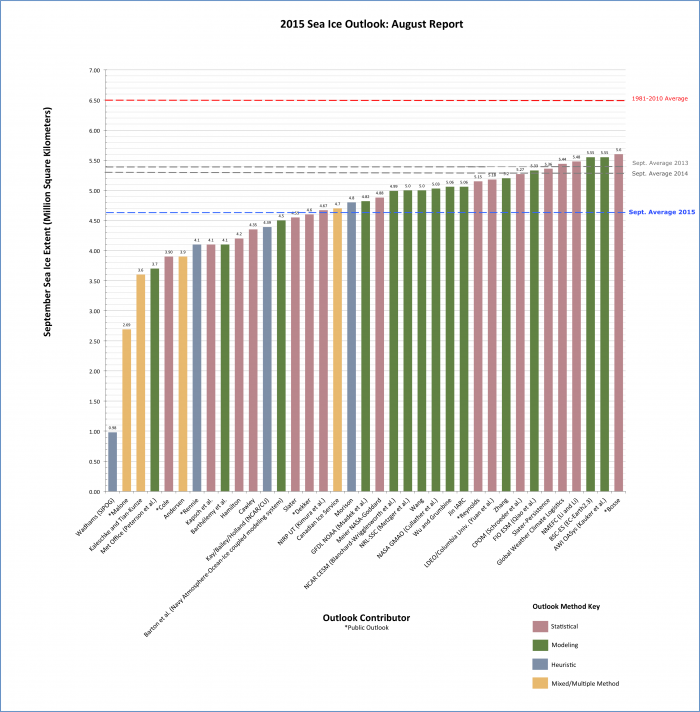

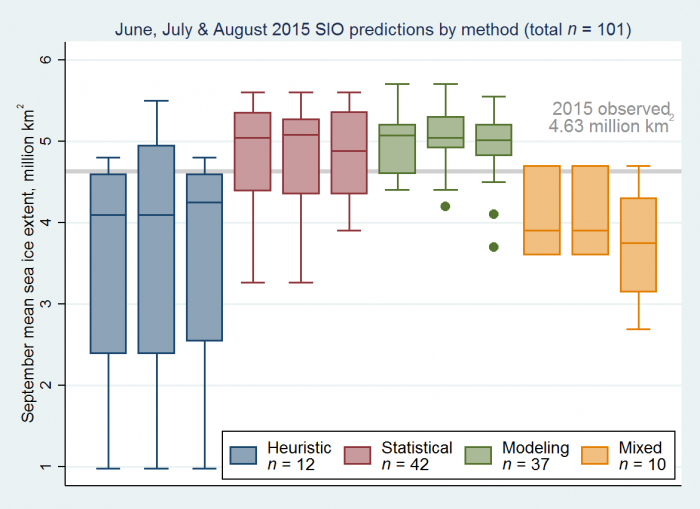

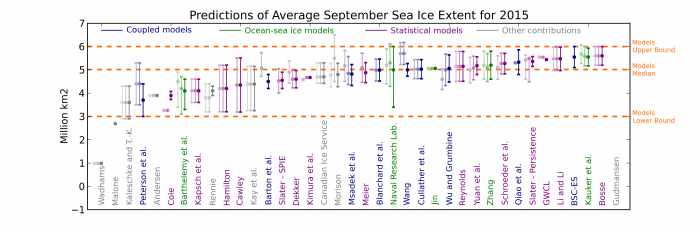

There were 37 pan-Arctic Outlook contributions in August, covering a range of different methods: (1) statistical methods that rely on historical relationships between extent and other physical parameters, (2) dynamical models, and (3) heuristic methods such as naïve predictions based on trends or other subjective information. The median August estimate was 4.8 million square kilometers, with a quartile range of 4.2 to 5.2 million square kilometers (Figure 5). This was a small downward adjustment from June and July (5.0, quartile range: 4.4-5.2) and represents an improvement in the bias of the median estimate relative to the observed extent, but no improvement in the range of predictions. Improvements in the bias and range are usually expected as the forecast period, known as "lead-time", shortens and information from June and July can be incorporated in later forecasts. However, we note that many participants submit the same prediction in each month, which limits the possibility of forecast improvement from June to August.
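As a rough illustration of how a median and quartile range are obtained from a set of submissions, the sketch below uses Python's standard `statistics` module. The list `submissions` contains made-up values, not the actual 2015 contributions, and the "inclusive" quantile method is an assumption; the report does not state which quantile convention was used.

```python
from statistics import median, quantiles

# Hypothetical pan-Arctic September extent predictions (million km^2).
# These are NOT the real Outlook submissions.
submissions = [4.0, 4.2, 4.6, 4.8, 5.0, 5.2, 5.6]

# quantiles(..., n=4) returns the three cut points Q1, Q2 (median), Q3.
q1, q2, q3 = quantiles(submissions, n=4, method="inclusive")

print(f"median={median(submissions):.2f}, quartile range={q1:.2f}-{q3:.2f}")
```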


The median Outlooks performed reasonably well relative to standard baseline metrics of persistence (i.e., use the 2014 observed value to estimate 2015) and trend (extrapolate the observed 1979-2014 trend line to estimate 2015). The median of 4.8 million square kilometers was significantly lower than the persistence value of 5.28 million square kilometers, but was roughly the same as the extrapolated trend estimate of 4.76 million square kilometers. As noted in Stroeve et al. (2014), the Outlook predictions tend to better match the observations when the observed extent is near the trend line (Figure 6). Thus the good performance this year does not necessarily indicate an improvement in skill but rather an easier year to predict, as the observed extent coincides with the trend line.
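The two baselines can be sketched in a few lines of Python. This is a minimal illustration assuming an ordinary least-squares line for the trend baseline; the function names and the short example series are hypothetical and are not the observed 1979-2014 record.

```python
def persistence_forecast(extents):
    """Persistence baseline: next year's extent equals the latest observed value."""
    return extents[-1]


def trend_forecast(years, extents, target_year):
    """Trend baseline: fit a least-squares line and extrapolate to target_year."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(extents) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, extents))
    slope = sxy / sxx
    return mean_y + slope * (target_year - mean_x)


# Made-up series (million km^2) with a steady decline, for illustration only.
years = [2010, 2011, 2012, 2013, 2014]
extents = [5.0, 4.9, 4.8, 4.7, 4.6]

persistence = persistence_forecast(extents)
trend = trend_forecast(years, extents, 2015)
print(f"persistence={persistence:.2f}, trend={trend:.2f}")
```

With a real record, the two baselines diverge in years that depart strongly from the trend line, which is when they are most informative as benchmarks.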

Splitting the contributions by method indicates little improvement in predictions at shorter lead-time (Figure 7). Comparing the evolution of the distributions from June to August, the heuristic and statistical methods reduced their bias and range, while the models changed only a small amount and the contributions from the mixed methods actually diverged in bias and range. The small change in the model contributions may be because most modeling groups did not update their submissions in July and August, since rerunning a forecast is especially costly for models. The poor performance of the mixed methods may simply be due to the low number of submissions (only 10 total over the three months).

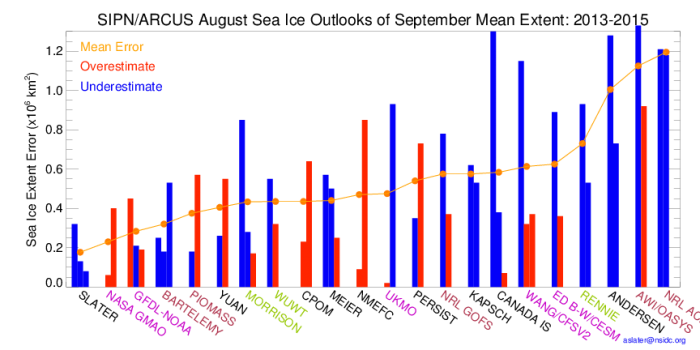

The continual evolution of forecasting systems and the lack of a complete set of forecast submissions (both this year and in the past) make it difficult to draw generalizations. There is a substantial range of submissions within each of the above-mentioned Outlook methods, perhaps because the differences in the details of techniques within each group can be considerable. However, some individual forecasts/projections tend to be more consistent than others, at least within the past few years (when the number of contributions has increased; see Figure 8 below). While the top four performers of pan-Arctic estimates submitted in August 2015 were statistical methods (see Figure 5), if we consider corrections based on past performance (see Figure 8), the four best modeling efforts would have provided better performance than the four top statistical methods. Note also that the top five performers in Figure 8 also make forecasts at the local scale, rather than predicting pan-Arctic extent alone, and thus offer far more potential information. However, as noted above, our analysis thus far is incomplete and limited to pan-Arctic predictions. In the final section of this Report, we discuss recommendations for expanding the SIO with year-round submissions and more detailed analysis.

Summary of Local-Scale Analysis

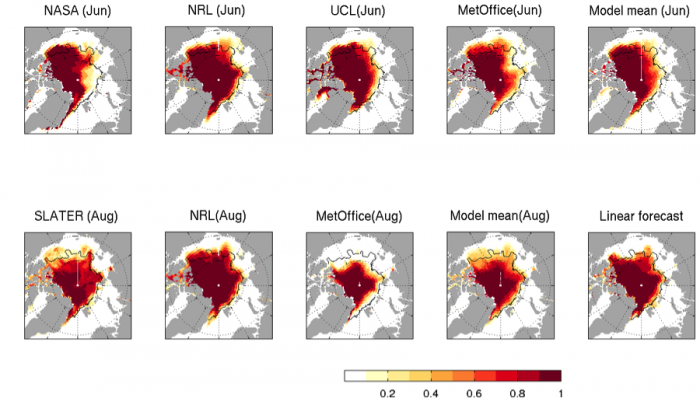

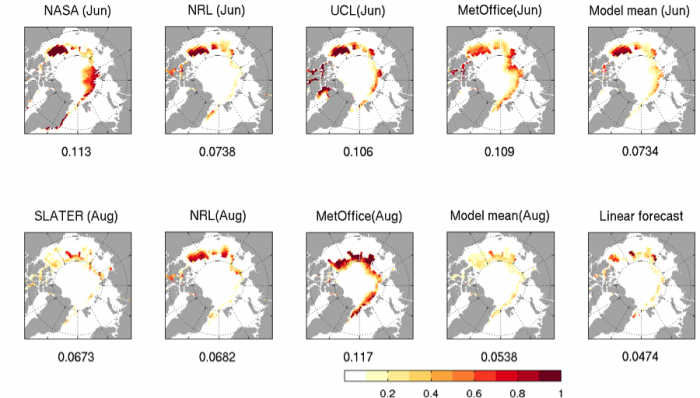

A number of groups submitted predictions at the local-scale for sea ice extent probability (SIP), four for the June Outlook (NASA, NRL, UCL and MetOffice), two for the July Outlook (NCAR, NRL), and three for the August Outlook (Slater, NRL and MetOffice). All models apart from Slater are dynamical models, whereas Slater is a statistical model. Below in Figure 9 we show the forecasted SIPs with the observed September 2015 sea ice edge and in Figure 10 the Brier scores (a metric constructed so that 0 indicates a perfect forecast and 1 indicates an erroneous, or zero skill, forecast) from each forecast and the multi-model mean. We also show the SIP and Brier score from a statistical forecast obtained by extending the 1979-2014 linear trend at each grid point.
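For readers unfamiliar with the Brier score, the following Python sketch shows one simple way to compute it for a gridded SIP forecast: the mean squared difference between the forecast probability and the observed 0/1 ice presence. It assumes an unweighted average over grid cells (an operational evaluation might weight cells by area), and the function name and the tiny 2x2 grids are purely illustrative.

```python
def brier_score(sip, observed):
    """Mean squared difference between forecast probability and 0/1 outcome.

    sip:      2-D list of forecast probabilities in [0, 1]
    observed: 2-D list of 0/1 values (1 = ice present in September)
    Assumption: every grid cell carries equal weight.
    """
    total = 0.0
    count = 0
    for sip_row, obs_row in zip(sip, observed):
        for p, o in zip(sip_row, obs_row):
            total += (p - o) ** 2
            count += 1
    return total / count


# Illustrative 2x2 grids, not real forecast or observation data.
forecast = [[1.0, 0.5],
            [0.0, 0.0]]
outcome = [[1, 1],
           [0, 1]]

score = brier_score(forecast, outcome)
print(f"Brier score = {score:.4f}")
```

A score of 0 would mean every cell was forecast with full confidence and was correct, while a score of 1 would mean every cell was forecast with full confidence and was wrong.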

In both plots, we break down the forecast submissions into the earliest (June Outlook) and latest (August Outlook) forecasts. From visual inspection of Figure 9, an improvement in August forecast SIPs relative to June forecast SIPs can be discerned. The model-mean June SIP forecast shows very high SIP in the Beaufort Sea and very low SIP in the Laptev/Kara, which are partly corrected in the model-mean August SIP forecast. The improvement in the model-mean August SIP forecast is confirmed by the Brier scores in Figure 10. Another interesting result is that the multi-model mean forecast for each monthly report is more accurate than all individual model forecasts for the same month. As in 2014, the August statistical forecast of Slater shows a high degree of skill. We also produce a statistical linear forecast of SIP by taking the observed sea ice extent at each grid cell (1 for sea ice concentration > 0.15, 0 for sea ice concentration < 0.15), fitting a line of best fit over 1979-2014 and extrapolating the fit to 2015. This forecast shows very good skill, which is not too surprising considering that the total 2015 extent was very close to the linear trend line (see Figure 3).
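The statistical linear forecast described above can be sketched for a single grid cell as follows. This is a hedged illustration, not the authors' code: the function name is hypothetical, the clipping of the extrapolated value to the interval [0, 1] is an added assumption (the report does not say how out-of-range values were handled), and a concentration of exactly 0.15 is treated here as ice-free.

```python
def trend_sip(years, concentrations, target_year, threshold=0.15):
    """Extrapolate a linear fit of binary ice presence to target_year.

    concentrations: September sea ice concentration (0-1) for one grid cell,
                    one value per entry in `years`.
    """
    # Binarize: 1 where concentration exceeds the threshold, else 0.
    presence = [1.0 if c > threshold else 0.0 for c in concentrations]

    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(presence) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, presence))
    value = mean_y + (sxy / sxx) * (target_year - mean_x)

    # Assumption: clip so the result can be read as a probability.
    return min(1.0, max(0.0, value))


# Made-up example cells, for illustration only.
years = [2009, 2010, 2011, 2012, 2013, 2014]
losing_ice = trend_sip(years, [0.9, 0.8, 0.6, 0.1, 0.05, 0.0], 2015)
always_ice = trend_sip(years, [0.9, 0.95, 0.9, 0.85, 0.9, 0.9], 2015)
print(losing_ice, always_ice)
```

Applying the function to every grid cell yields a map that can be scored against the observed ice edge in the same way as the model SIP forecasts.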

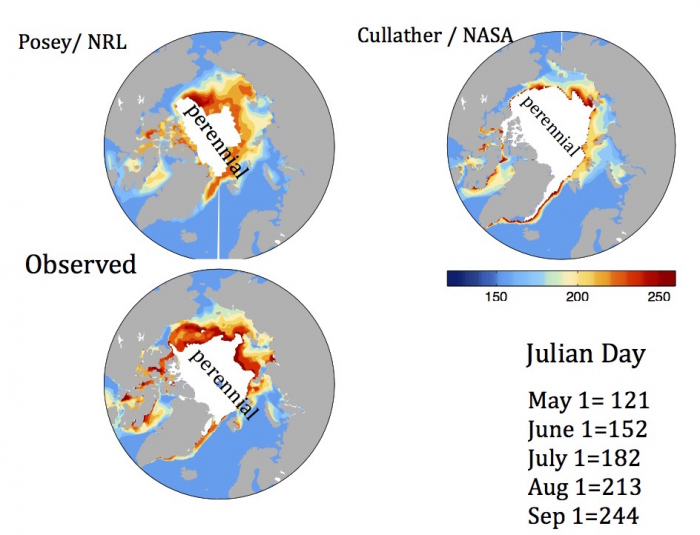

We also received forecasts of Ice Free Dates (IFDs)—the first day of the year a grid cell becomes ice-free—from NRL and NASA GMAO. Below we show the IFDs from the June Outlook (Figure 11). While there are biases in the model forecasts, late IFDs (or even perennial ice) are forecast in the Beaufort and East Siberian seas, and early IFDs are forecast in the Laptev and Kara seas. In this region, the NRL forecast does remarkably well. In Baffin Bay, models forecast an earlier melt than observed.
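As an illustration of the IFD definition, the sketch below finds the first day of the year on which a grid cell's daily concentration series falls below a threshold. The 15% threshold is borrowed from the extent definition used elsewhere in this report and is an assumption here, as is the convention that the series starts on 1 January; the function name is hypothetical.

```python
def ice_free_date(daily_concentration, threshold=0.15):
    """Return the 1-based day of year when the cell first becomes ice-free.

    daily_concentration: concentrations (0-1) starting on 1 January.
    Returns None if the cell never drops below the threshold (perennial ice).
    """
    for day_of_year, concentration in enumerate(daily_concentration, start=1):
        if concentration < threshold:
            return day_of_year
    return None


# Made-up five-day series, for illustration only.
melting_cell = [0.9, 0.6, 0.3, 0.1, 0.0]
perennial_cell = [0.9, 0.9, 0.8, 0.8, 0.9]

print(ice_free_date(melting_cell), ice_free_date(perennial_cell))
```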

Summary of Modeling Contributions

Among the 14 contributions from modeling groups for the August Outlook, nine submissions were made using fully-coupled atmosphere-ocean-(land)-sea ice models and five using ocean-sea ice models forced by atmospheric reanalysis of previous years or output from an atmospheric model.

In the 2014 SIO Post-Season Report we discussed the evolution over time (from June to August) of the model predictions and found that this ensemble was becoming more certain (collectively and for models individually) as the initialization date approached September. This pattern is less clear this year (Figure 5 and Figure 12), suggesting either that predictability associated with initial conditions was less important in 2015 than last year, or that sampling errors are large due to the small ensemble size. Either way, we note that only six groups provided three distinct Outlooks for June, July and August, making it difficult to discern common patterns.


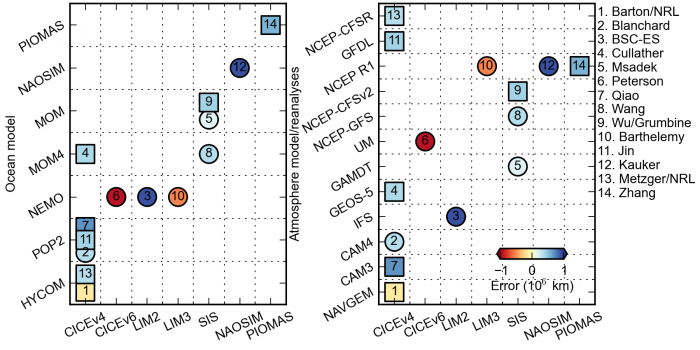

In addition to the analysis of uncertainty in predictions from each of the 14 modeling groups, we also provide, for the first time, an attempt to visually document uncertainty associated with the forecast system across the ensemble (Figure 13). The figure summarizes how forecast error depends on several parameters: sea ice model (x-axis), ocean model (y-axis of the left panel), atmosphere model (y-axis of the right panel), whether post-processing was used (circle=bias correction, square=no bias correction), and the type of configuration (coupled: 1-9, forced: 10-14). We do not find compelling evidence that any of these choices leads to systematically better forecasts. Bias-corrected contributions (4.82 million square kilometers) are in general closer to the observed value of 4.63 million square kilometers than raw forecasts (5.03 million square kilometers), but this improvement cannot be distinguished from noise, so we cannot rule out that it happened by chance. Likewise, it is not statistically clear whether coupled configurations (4.94 million square kilometers) provide more accurate forecasts than forced configurations (4.98 million square kilometers), given the large standard deviations in both subsets (1.72 and 0.54 million square kilometers, respectively).

Figure 13, as it stands, reveals that the 2015 contributions sampled only a tiny fraction of the full space of possible configurations. This is because ocean and sea ice models often are developed in pairs and because the number of possible choices to make in the experimental design is comparable to the number of submissions. When considering different ocean/sea ice/atmosphere model names as they appear on the figure, the similarities these models share (i.e., equations, parameterizations and even parameter values are often the same) should be kept in mind.

We therefore encourage more groups to participate in the 2016 Outlook using new sea ice models (even very simple ones), exploring different physical parameterizations if possible, and perturbing uncertain parameter values in order to generate a spread of estimates that reflects uncertainty in the predictions. Finally, this year, only two groups (Kauker et al. and Blanchard et al.) explored the sensitivity of the results to the initial sea ice state in their Outlook submission. However, seven additional groups participated in a coordinated experiment of the 2015 Modeling Action Team to investigate such sensitivity. Analysis of the results is still underway and is intended to inform methods for building ensembles that truly reflect our uncertainty in forecasts. This experiment is a step towards addressing this exciting challenge in sea ice prediction.

Summary of Statistical Contributions

There were 16 contributions based on statistical models and another two that were combined statistical/heuristic. The majority of the statistical contributions, 13, were based on regression/correlation or other parametric techniques. Sea ice parameters were the common predictor amongst all but two of the statistical models and the sole predictor in 11 of the models. The specific sea ice parameter (i.e., extent, melt pond fraction, area, concentration, volume, thickness) and lead-time varied between models, which is likely the reason for the large spread in predictions. Another factor is the training period (the time period over which observations are used to build statistical methods): only 10 groups trained their model on the whole 36-year record 1979-2014. Availability of predictor data was a limitation for some groups, while a few others chose a shorter training period to improve forecast skill on the assumption that this year will likely most resemble the past 10 years and not the past 30 years.

Lessons from 2015 and Recommendations for the 2016 Outlook

Seasonal sea ice forecasting still has many lessons to learn and developments to make. This is similar to other areas of sub-seasonal to seasonal forecasting [e.g., the Madden-Julian Oscillation (MJO), North Atlantic Oscillation (NAO), and El Niño-Southern Oscillation (ENSO)], as the discipline of sea ice forecasting is relatively young. Suggestions are provided below to improve forecasting and better assess and compare contributions. Further suggestions from the community are welcome.

- Better initialization of sea ice condition, including incorporating more observational data, particularly thickness data from NASA IceBridge and ESA CryoSat-2 platforms. Both are now providing thickness fields with quick turnaround times. In addition, initialization of sea ice temperature will aid simulations of the sea ice-ocean boundary layer.

- Encourage contributions of metrics in addition to the total sea ice extent to aid in understanding the complexity of sea ice forecasting and ultimately better serve stakeholders. Examples of these metrics include local-scale coverage of the September sea ice extent probability and first date of ice-free conditions (variables we refer to as SIP and IFD). Such metrics offer value to ships operating in the Arctic that require a set amount of time to leave a region before sea ice refreezes.

- Assist SIO participants to provide expanded metrics, providing guidelines on grids to submit, or a tool to allow for easy regridding/reformatting of spatial maps.

- Continue to encourage regional outlooks. Regional outlooks, particularly in June, can elucidate anomalous conditions near the beginning of the melt season. In an effort to work toward greater stakeholder relevance, regional contributions throughout summer and even post-minimum may complement stakeholder perspectives by providing information relevant to activities in Arctic marine and coastal environments, which can require data not captured through pan-Arctic efforts, such as landfast ice and ice concentrations below 15%.

- Encourage more uncertainty information from submissions, including raw (not bias corrected) results of hindcast runs from previous years to provide a quantitative performance assessment of each method/model and their biases. There are now 37 years of observed extents for the methods to test against. Other seasonal forecasting intercomparisons, such as the NMME, require multiple years of hindcasting to better understand systematic biases within the forecasting framework, and a similar framework will aid in seasonal sea ice prediction.

- For groups running ensembles of predictions, provide distributions of raw and, if possible, bias-corrected forecasts rather than only means, medians, and ranges or standard deviations. (That is, if a group runs 30 predictions using the same model, provide all 30 raw values.) This makes the contributions much easier to compare with each other. This could also be a good opportunity to test how many ensemble members are needed to estimate reasonable statistics.

- A funded effort among the modeling groups to constrain experiments using the same initialization fields (extent, concentration, thickness), and atmospheric and oceanic forcing would yield simulations that better facilitate intercomparisons of model physics. Such an effort can build upon the 2015 Modeling Action Team experiment, but with funding to permit greater participation.

- An understanding/definition of the differences between modeled and observed sea ice concentrations will aid in a better comparison between models and observations.

- A comparison of statistical methods using common metrics for evaluating skill and uncertainty. The majority of the statistical submissions are based on regression/correlation or other parametric methods; with 37 years available for training, an overall assessment of the skill of statistical techniques and the relative strength of predictors is possible.

- A more systematic approach to forecast evaluation, with year-round forecasts and metrics that also evaluate the local scale, is needed if we are to improve seasonal sea ice forecasts. The SIPN leadership team is planning to address these needs soon.

References

Stroeve, J., L. C. Hamilton, C. M. Bitz and E. Blanchard-Wrigglesworth. 2014. Predicting September sea ice: Ensemble skill of the SEARCH Sea Ice Outlook 2008-2013. Geophysical Research Letters 41(7): 2411-2418, doi:10.1002/2014GL059388.